The intersection of rapidly evolving technology and political discourse has opened a new arena of legal battles, particularly over copyright, deepfakes, and the use of AI in political contexts. We're living in an era where distinguishing fact from fiction is becoming harder, thanks to sophisticated synthetic media. This isn't just about fun filters; it's about the very integrity of our political processes and the trust we place in what we see and hear.

This guide dives deep into the complex, often fragmented, legal responses to deepfakes and AI's role in shaping political narratives. We’ll explore what laws exist, where the gaps lie, and how this evolving landscape impacts campaigns, candidates, and ultimately, you.

At a Glance: Navigating the New Digital Frontier

- Deepfakes Defined: Synthetic media that falsely depict identifiable people saying or doing things they never did, created using advanced AI.

- No Single Federal Law: The U.S. lacks a comprehensive federal law for deepfakes; regulation is a patchwork of state laws and adapted federal statutes.

- Intent is Key: Most laws target deepfakes with deceptive intent, requiring proof of intent to deceive, injure, or defraud.

- Safe Harbors: Content clearly labeled as satire or parody often enjoys legal protection.

- Intimate Deepfakes: The federal TAKE IT DOWN Act criminalizes knowingly publishing nonconsensual intimate deepfakes and requires covered platforms to remove reported content within 48 hours.

- Political Context: State laws address deepfakes used to interfere with elections, often requiring disclosure and penalizing distribution close to an election.

- Legal Lag: The law struggles to keep pace with technology, particularly in political speech, where First Amendment protections create a "legal gray zone."

- Future Focus: Emerging solutions involve mandatory disclosure, platform accountability, and a harmonized federal baseline, all while safeguarding free speech.

The Deepfake Deluge: What We're Up Against

Imagine seeing a prominent political figure delivering a speech, only to find out later that every word, every gesture, was a sophisticated fabrication. This isn't science fiction anymore; it's the reality of deepfakes. These are synthetic media, engineered with advanced artificial intelligence (AI), designed to falsely portray an identifiable person saying or doing something they never actually did. While some deepfakes can be harmless fun or artistic expressions, their malicious uses are far more concerning, particularly in the realm of politics.

Deepfakes pose a significant threat to our democratic processes. They can be deployed for election interference, to spread disinformation, to commit fraud, or even to harass individuals. The power of AI allows for the material alteration of a person’s appearance or actions, making the synthetic content incredibly convincing and likely to deceive a reasonable observer. This deceptive intent is often the trigger for legal accountability, shifting the focus from simply creating the content to how and why it's distributed.

The ease of creating and disseminating these fakes means that trust in visual and audio evidence, once a cornerstone of public discourse, is rapidly eroding. As you might expect, the legal system is scrambling to catch up.

The Legal Maze: Fragmented Responses to a United Threat

One of the biggest hurdles in combating malicious deepfakes is the lack of a cohesive, comprehensive federal law in the United States. This isn't a surprise given the speed of technological advancement versus the deliberate pace of legislation. Instead, the current legal landscape is a fragmented collection of state statutes, often supplemented by adapted federal laws that weren't originally designed for AI-generated deception.

Think of it as patching a leaky roof with a dozen mismatched materials: each state takes its own approach, producing a patchwork of regulations that can be confusing for victims and difficult to enforce across state lines. Existing federal statutes, such as the Computer Fraud and Abuse Act (CFAA) or wire fraud statutes, have been creatively applied to deepfake cases, but they often require specific types of harm or intent that don't align neatly with the nuances of synthetic media.

Accountability for deepfakes generally hinges on two critical factors: deceptive intent and material alteration. This means the content must significantly change a person's appearance or actions, and it must be likely to deceive a reasonable person. Crucially, most laws require proof of an intent to deceive, injure, or defraud. This "intent" clause is a high bar, often making prosecution challenging.

However, the law isn't blind to artistic expression. Many of these statutes include "safe harbors" for content that is clearly labeled as satire or parody. This distinction is vital for protecting free speech, ensuring that comedic or artistic uses of synthetic media aren't inadvertently stifled by laws aimed at malicious deception. The challenge, of course, is drawing a clear line between art and fraud, especially in a politically charged environment.

When Intimacy Is Invaded: The TAKE IT DOWN Act

While the broader deepfake landscape struggles with comprehensive federal regulation, one specific and egregious form of synthetic media has received direct federal attention: nonconsensual intimate deepfakes. These are digital forgeries depicting individuals in intimate visual depictions without their consent, a deeply invasive and harmful act.

Recognizing the severe emotional distress, reputational damage, and psychological harm caused by such content, Congress passed the Tools to Address Known Exploitation by Immobilizing Technological Deepfakes on Websites and Networks Act, more commonly known as the TAKE IT DOWN Act, which was signed into law in May 2025. This landmark federal law directly criminalizes knowingly publishing such "digital forgeries" without consent.

The penalties for violating the TAKE IT DOWN Act are substantial. Offenses involving depictions of adults carry federal criminal penalties, including fines and up to two years in prison, while offenses involving minors can lead to up to three years. Beyond criminalizing the act itself, the TAKE IT DOWN Act also places significant responsibility on covered online platforms. These platforms must establish a notice-and-removal process and remove reported intimate deepfake content within 48 hours of receiving a valid removal request. This swift action is designed to mitigate the rapid and widespread harm these images can cause.
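To make the 48-hour window concrete, here is a minimal Python sketch of how a platform's trust-and-safety team might track removal deadlines. The names `REMOVAL_WINDOW`, `removal_deadline`, and `is_overdue` are hypothetical, and the sketch assumes the clock starts when a valid removal request is received; it does not model what makes a request valid or any other statutory detail.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical constant modeling the 48-hour removal window.
# Assumption: the clock starts when a valid removal request is received.
REMOVAL_WINDOW = timedelta(hours=48)


def removal_deadline(received_at: datetime) -> datetime:
    """Return the latest time by which reported content should be removed."""
    return received_at + REMOVAL_WINDOW


def is_overdue(received_at: datetime, now: datetime) -> bool:
    """Return True if the removal window has fully elapsed at `now`."""
    return now > removal_deadline(received_at)


# Hypothetical request received at 09:30 UTC on June 2, 2025.
received = datetime(2025, 6, 2, 9, 30, tzinfo=timezone.utc)
print(removal_deadline(received))  # 2025-06-04 09:30:00+00:00
print(is_overdue(received, datetime(2025, 6, 3, 9, 30, tzinfo=timezone.utc)))  # False
```

Using timezone-aware timestamps keeps the deadline unambiguous when reports and moderators sit in different time zones.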

This specific federal legislation demonstrates a clear societal and legal consensus against the nonconsensual weaponization of AI for intimate visual depictions, providing a strong legal recourse for victims in a particularly vulnerable area.

Deepfakes on the Ballot: Protecting Elections

The political arena is arguably where deepfakes pose their most immediate and widespread threat, capable of swaying public opinion, undermining campaigns, and even influencing election outcomes. Consequently, many state laws have specifically targeted the use of deepfakes to interfere with political campaigns and elections.

These state statutes zero in on synthetic media that falsely depict political candidates. Their primary goal is to prevent voter deception and ensure electoral integrity. To achieve this, many jurisdictions now require clear disclosure or labeling for political advertisements that utilize synthetic content. This aims to empower voters with the knowledge that what they are seeing or hearing might not be entirely authentic, allowing them to critically evaluate the information.

The timing of a deepfake's distribution in an election cycle is often a critical factor. Knowingly distributing a deceptive deepfake within a specific window—typically 30 to 120 days before an election—can trigger severe civil or criminal penalties. This legislative strategy acknowledges that misinformation spread close to an election has the greatest potential to cause irreparable harm to a candidate or the democratic process itself, leaving little time for correction or rebuttal.
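The window check itself is simple date arithmetic. The Python sketch below uses a hypothetical helper, `in_pre_election_window`, that counts calendar days between distribution and election day and treats both ends of the window as inclusive. That boundary choice is an assumption; real statutes define their windows, and which days count, in their own terms.

```python
from datetime import date


def in_pre_election_window(distributed_on: date, election_day: date, window_days: int) -> bool:
    """Return True if `distributed_on` falls within `window_days` calendar days
    before `election_day`, counting election day itself.
    Inclusive bounds are an assumption; statutes differ."""
    days_before = (election_day - distributed_on).days
    return 0 <= days_before <= window_days


# Hypothetical example: a deepfake posted 49 days before a November 3, 2026 election.
election = date(2026, 11, 3)
posted = date(2026, 9, 15)
print(in_pre_election_window(posted, election, 120))  # True
print(in_pre_election_window(posted, election, 30))   # False
```

The same post can therefore fall outside a 30-day rule yet inside a 120-day rule, which is one concrete way the state-by-state patchwork complicates compliance for anyone distributing political content across jurisdictions.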

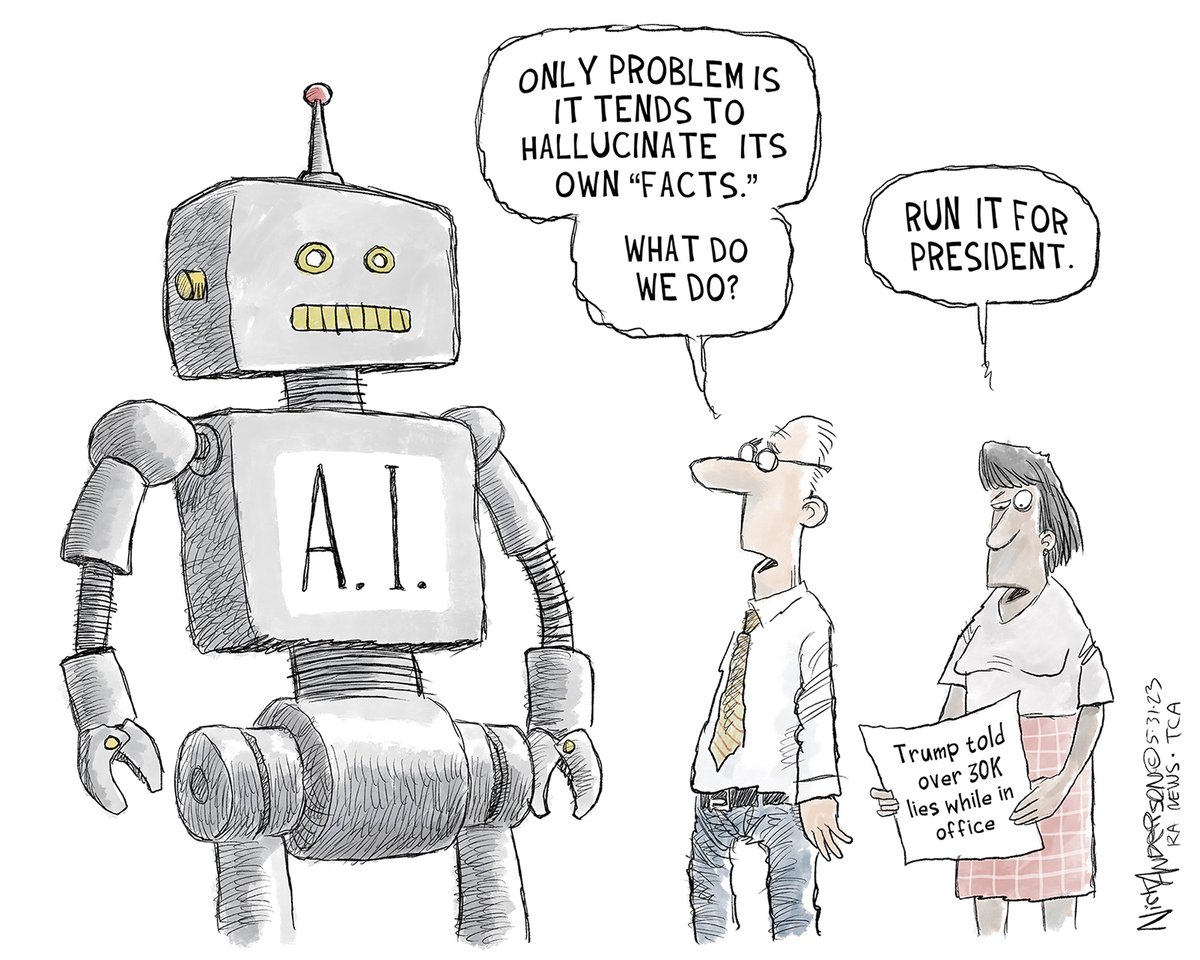

Consider the potential for a fabricated video showing a candidate making a controversial statement just days before an election. The damage could be done before the truth ever surfaces. It's this kind of scenario that these state-level prohibitions aim to prevent. The ease with which generative AI tools can already produce images and messages that mimic political figures underscores how pressing these legal questions are, and how important it is to have legal guardrails.

These laws represent a crucial step towards creating a more transparent and trustworthy political information environment, though they face significant challenges, as we'll explore next.

Seeking Justice: Remedies for Deepfake Victims

When someone falls victim to a malicious deepfake, whether it's an intimate forgery or a politically damaging fabrication, the law offers several avenues for redress. These remedies fall into two main categories: criminal and civil.

Criminal penalties are often reserved for the most severe cases, particularly those involving deceptive intent or specific harms. The federal TAKE IT DOWN Act, for instance, provides for fines and prison terms of up to two years for offenses involving adults and up to three years for offenses involving minors, as previously mentioned. Some state laws, particularly those treating deepfake distribution as a felony, can also carry hefty fines (e.g., up to $15,000) and multi-year prison sentences. These penalties reflect society's condemnation of such acts and serve as a deterrent.

Civil remedies, on the other hand, focus on compensating victims for their losses and preventing further harm. Victims can typically seek monetary damages for a range of impacts, including:

- Emotional distress: The psychological toll of being falsely depicted in a harmful way.

- Reputational harm: Damage to one's public image, career, or personal standing.

- Financial losses: Tangible costs such as lost income, legal fees, or expenses incurred to mitigate the damage.

Beyond monetary compensation, civil actions can also secure injunctive relief. This is a court order compelling the removal and cessation of dissemination of the harmful content. For a deepfake victim, getting the fabricated content taken down and preventing its further spread is often paramount to mitigating ongoing damage. This legal tool can be powerful in halting the viral spread of misinformation or harmful imagery.

The combination of criminal prosecution and civil recourse offers a dual-pronged approach to hold perpetrators accountable and provide a path to recovery for victims. However, the unique challenges of attribution and the rapid global dissemination of digital content can make both types of legal action complex and demanding.

The First Amendment Tightrope: Navigating Free Speech

One of the most significant complexities in regulating deepfakes, particularly in political contexts, is the First Amendment's robust protection of free speech. This constitutional cornerstone creates a "legal gray zone" where laws designed to combat deepfake deception can clash with protected political expression.

Political speech, even if misleading or potentially false, generally enjoys strong constitutional protection in the United States. This makes courts inherently wary of laws that attempt to ban or heavily restrict it without extremely narrow tailoring. The concern is that overly broad legislation could inadvertently "chill" legitimate political discourse, satire, or criticism, leading to a stifling effect on public debate.

We've already seen state-level regulations, such as those in California and Minnesota, face legal challenges rooted in First Amendment concerns. These challenges argue that the laws are too broad, infringe on political expression, or fail to adequately distinguish between harmful deception and protected speech. Courts often apply strict scrutiny to laws that touch upon core political speech, requiring the government to demonstrate a compelling state interest and that the law is narrowly tailored to achieve that interest, using the least restrictive means possible.

This means that a sweeping ban on all deepfakes, or even all "deceptive" deepfakes, would likely struggle to survive constitutional scrutiny. The path forward, therefore, is not through broad prohibitions but through carefully crafted regulations that distinguish between malicious intent and protected forms of expression. This necessitates clear exemptions or "safe harbors" for content that is clearly identifiable as satire or parody, ensuring that legitimate artistic and comedic uses of synthetic media are not inadvertently criminalized. The balance is delicate: protecting the integrity of political discourse without undermining the fundamental right to free speech.

Future-Proofing the Legal Framework: Emerging Solutions

The current legal landscape, a patchwork of state laws and adapted federal statutes, is clearly lagging behind the rapid progression of deepfake technology. As election cycles loom and AI capabilities expand, the urgency to develop more resilient and effective legal frameworks intensifies. Scholars and policymakers are actively exploring several emerging policy models to address this gap.

One conceptual approach is to view deepfakes through the lens of existing legal concepts. Depending on the intent and content, a deepfake could be treated as fraud (if it aims to deceive for financial or political gain) or as defamation (if it conveys a false and damaging statement of fact about a person), a category that traditionally covers both libel (written or otherwise fixed statements) and slander (spoken statements). This allows for the application of established legal principles, albeit often requiring adaptation to the digital, synthetic nature of deepfakes. Critically, these proposals also advocate for clear exemptions or "safe harbors" for satire and parody, reinforcing the First Amendment considerations.

Beyond conceptual reframing, several concrete policy models are gaining traction:

- Mandatory Disclosure or Labeling Requirements: This approach requires content creators or distributors to clearly label synthetic media as "AI-generated" or "altered." The idea is to provide transparency, allowing consumers to make informed judgments.
  - Tradeoffs: Enforcement relies heavily on accurate detection technology, which is itself locked in an arms race with deepfake creation. It also places the burden on creators, who might circumvent such rules.

- Increased Platform Accountability: This model shifts some responsibility to the online platforms where deepfakes are distributed. It could involve stricter content moderation policies, faster takedown procedures (similar to the TAKE IT DOWN Act's requirements for intimate deepfakes), or greater transparency regarding algorithmic amplification of synthetic content.
  - Tradeoffs: While potentially effective, this risks private censorship, where platforms, out of an abundance of caution, might over-remove content that is legitimate or falls into a gray area, potentially chilling free speech.

- Creation of Federal Baseline Standards: Given the fragmented state-level responses, there's a strong argument for a harmonized federal baseline. This would establish minimum national standards for deepfake regulation, preventing a confusing mosaic of laws and ensuring consistent protections across jurisdictions.
  - Tradeoffs: Any federal rules would need to be narrowly tailored enough to survive constitutional scrutiny, especially regarding political speech. Crafting such precise legislation is a monumental task.

The path forward is unlikely to involve sweeping bans that could stifle innovation or legitimate expression. Instead, the consensus leans towards a combination of these approaches: narrowly tailored disclosure requirements, robust safe harbors for parody and satire, and a harmonized federal baseline. The implementation of these measures without inadvertently chilling core political expression will be a significant test for lawmakers, courts, and online platforms alike. The stakes are high; continued legal lag risks further eroding public trust in political communication and the fundamental information ecosystem.

Navigating the Digital Minefield: What Citizens and Campaigns Can Do

In this evolving digital landscape, understanding the legal frameworks is one thing; navigating the practical challenges is another. For citizens and political campaigns alike, proactively engaging with the reality of deepfakes and AI is no longer optional.

For Citizens:

- Cultivate Media Literacy: While the law strives to protect you, your first line of defense is a critical eye. Question suspicious content, especially if it seems too outrageous or perfectly aligns with a pre-existing bias.

- Look for Disclosures: Pay attention to any labels indicating content is "AI-generated" or "synthetic." As laws around disclosure become more prevalent, this will be an increasingly important cue.

- Verify Information: Cross-reference unusual claims or visually striking content with reputable news sources and official campaign channels.

- Understand Reporting Mechanisms: If you encounter nonconsensual intimate deepfakes, remember the TAKE IT DOWN Act provides a federal avenue for recourse, requiring platforms to act quickly. Knowing how to report such content is crucial for victims and allies.

- Advocate for Stronger Laws: Educate yourself on proposed legislation and contact your representatives to support narrowly tailored, constitutionally sound regulations that protect against malicious deepfakes without stifling free speech.

For Political Campaigns:

- Transparency is Paramount: If your campaign uses AI for legitimate purposes (e.g., content generation, voter outreach), consider clear disclosures. This builds trust and insulates you from accusations of deception.

- Rapid Response Planning: Develop strategies for how to respond if your candidate or campaign becomes the target of a deepfake attack. This includes legal counsel, public relations messaging, and technical verification.

- Legal Counsel: Consult legal experts to understand the specific deepfake laws in the jurisdictions where you operate, especially regarding election timelines and disclosure requirements. Ignorance of the law is not a defense.

- Monitoring and Detection: Invest in tools and expertise to monitor for the creation and dissemination of deepfakes targeting your campaign or candidate. Early detection is key to mitigation.

- Ethical AI Use: Set clear ethical guidelines for any AI tools your campaign utilizes. The political context demands an even higher standard of care and transparency when leveraging these powerful technologies.

The responsibility to maintain a healthy information environment in the age of AI isn't solely on lawmakers; it's a shared endeavor. By being informed, critical, and proactive, citizens and campaigns can play a vital role in upholding democratic integrity against the backdrop of synthetic media.

The Road Ahead: Building Trust in a Synthesized World

Surveying the legal landscape around copyright, deepfakes, and AI in political contexts reveals a dynamic, often uncertain, terrain. We've seen the law's valiant attempts to catch up with technological leaps, from applying existing statutes to crafting targeted federal legislation like the TAKE IT DOWN Act. Yet the core challenge remains: how to effectively regulate sophisticated AI-generated deception, especially within the highly protected sphere of political speech, without inadvertently silencing legitimate expression.

The future of this legal landscape is unlikely to be marked by sweeping bans, which courts would likely reject as overly broad. Instead, the focus will continue to be on developing resilient frameworks built on precision: narrowly tailored disclosure requirements to ensure transparency, clear safe harbors to protect satire and parody, and the pursuit of a harmonized federal baseline to overcome fragmented state-level responses.

This endeavor will test the ingenuity of lawmakers, the interpretative wisdom of courts, and the responsibility of online platforms. The delicate balance involves empowering individuals and campaigns to distinguish truth from fabrication, holding malicious actors accountable, and fostering a public discourse grounded in trust. The risk of continued legal lag is profound—it could further erode confidence in our political communications, making it ever harder to discern reality and ultimately weakening the foundations of our democratic processes.

The battle against misleading synthetic media isn't just a legal one; it's a societal imperative to safeguard truth and trust in a world increasingly shaped by algorithms.